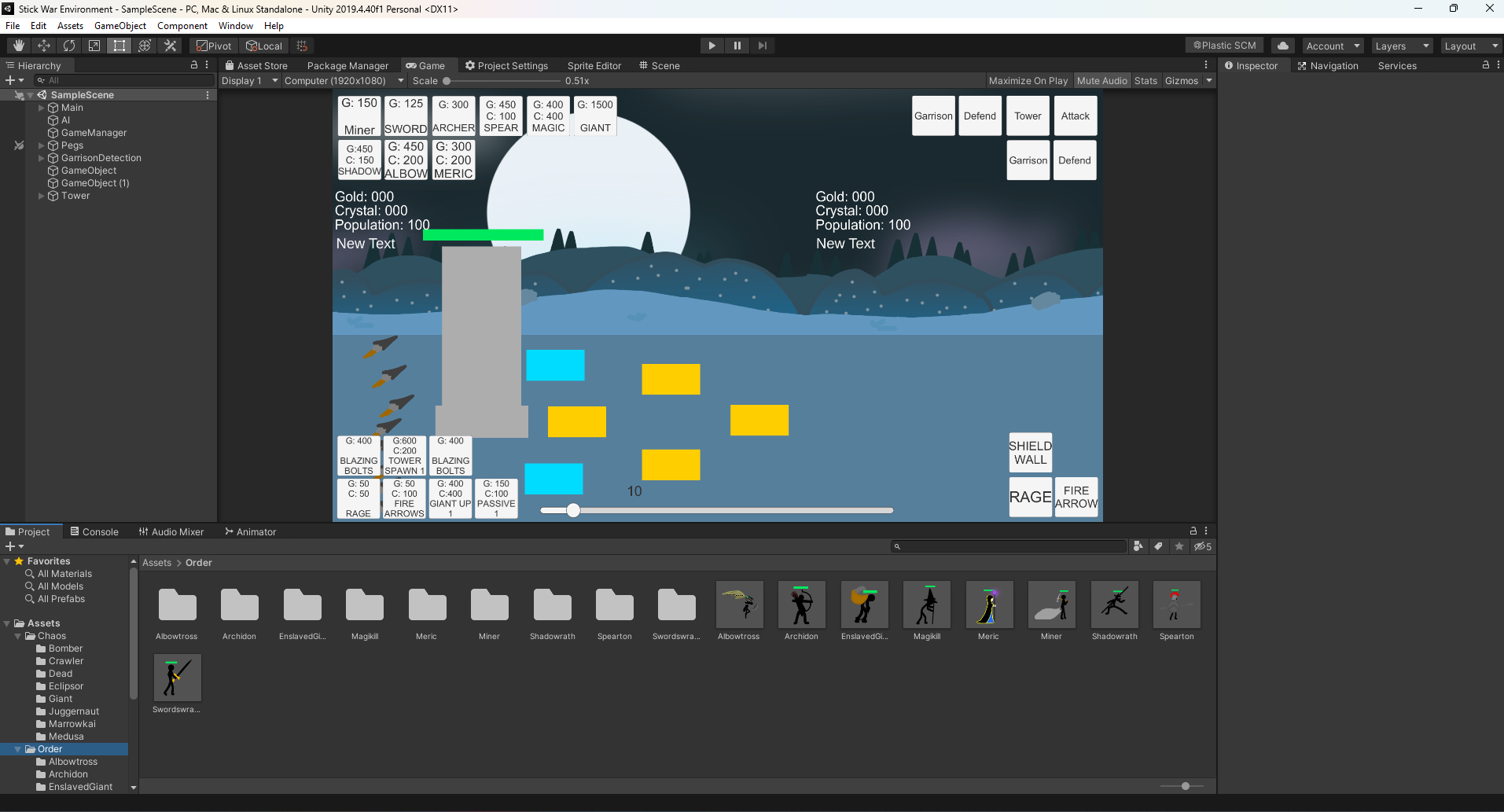

Two reinforcement learning agents play the game Stick War 2 against each other.

Currently, both AIs still need more training.

Devlog Source

Resources

Stick War 2 Discord

Information

Using the Unity game engine and editor, I created the environment for the agents to train in. It is almost identical to Stick War 2, except that I removed some of the upgrades so that games run faster and the AI trains faster.

To connect my environment to the two RL agents, I used [socket] to create threads through which the environment and the AI can request and send data. This is how I fed the agents their inputs and played their moves in the game.
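As a rough illustration of this kind of exchange, here is a minimal Python sketch of a request/response loop over a socket. The message format (length-prefixed JSON with `state` and `action` fields), the helper names, and the placeholder policy are all assumptions made for the example, not the project's actual protocol. `socket.socketpair()` stands in for the real connection between the Unity environment and the agent process.

```python
import json
import socket
import struct
import threading


def send_msg(sock, payload):
    """Send one JSON message prefixed with its 4-byte big-endian length."""
    data = json.dumps(payload).encode("utf-8")
    sock.sendall(struct.pack(">I", len(data)) + data)


def recv_exact(sock, n):
    """Read exactly n bytes, since recv() may return fewer than requested."""
    buf = b""
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("socket closed mid-message")
        buf += chunk
    return buf


def recv_msg(sock):
    """Receive one length-prefixed JSON message."""
    (length,) = struct.unpack(">I", recv_exact(sock, 4))
    return json.loads(recv_exact(sock, length).decode("utf-8"))


def agent_loop(sock, choose_action, steps):
    """Agent side: read an observation, reply with an action index."""
    for _ in range(steps):
        obs = recv_msg(sock)
        send_msg(sock, {"action": choose_action(obs["state"])})


def demo():
    # socketpair() is a stand-in for the real environment <-> agent link.
    env_sock, agent_sock = socket.socketpair()

    # Placeholder policy (hypothetical): pick the index of the largest value.
    def policy(state):
        return max(range(len(state)), key=state.__getitem__)

    worker = threading.Thread(target=agent_loop, args=(agent_sock, policy, 2))
    worker.start()

    actions = []
    for state in ([0.1, 0.9, 0.3], [0.7, 0.2, 0.1]):
        # Environment side: send the observation, wait for the chosen move.
        send_msg(env_sock, {"team": "ORDER", "state": state})
        actions.append(recv_msg(env_sock)["action"])

    worker.join()
    env_sock.close()
    agent_sock.close()
    return actions
```

The length prefix matters because TCP is a byte stream: without it, the receiver cannot tell where one observation ends and the next begins.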

Two RL agents were introduced to the environment: one plays on the [ORDER] team and the other on [CHAOS].

The model I'm using is a Dueling Double Deep Q-Network (D3QN) with Prioritized Experience Replay, N-Step Returns, and Noisy Layers. The same model is used for both agents.
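To make two of these pieces concrete, here is a small plain-Python sketch of the dueling aggregation and of an n-step target with Double Q-learning bootstrapping. It follows the standard textbook formulas rather than the project's actual code; the function names and the example numbers are hypothetical, and a real implementation would operate on batched tensors.

```python
def dueling_q(value, advantages):
    """Combine state value V(s) and advantages A(s, a) into Q(s, a).

    Subtracting the mean advantage keeps V and A identifiable.
    """
    mean_adv = sum(advantages) / len(advantages)
    return [value + a - mean_adv for a in advantages]


def n_step_double_q_target(rewards, dones, gamma, q_online_next, q_target_next):
    """n-step return with a Double Q-learning bootstrap.

    rewards, dones: the n transitions that follow the state being updated.
    q_online_next, q_target_next: Q-values of the online and target networks
    at the state reached after those n steps.
    """
    target = 0.0
    discount = 1.0
    for reward, done in zip(rewards, dones):
        target += discount * reward
        discount *= gamma
        if done:
            return target  # episode ended inside the window: no bootstrap
    # Double Q: the online network selects the action,
    # the target network evaluates it.
    best = max(range(len(q_online_next)), key=q_online_next.__getitem__)
    return target + discount * q_target_next[best]


# Hypothetical numbers, chosen only to show the arithmetic.
q_values = dueling_q(1.0, [0.5, -0.5, 0.0])
target = n_step_double_q_target(
    rewards=[1.0, 0.0, 2.0],
    dones=[False, False, False],
    gamma=0.5,
    q_online_next=[0.2, 0.9],
    q_target_next=[4.0, 8.0],
)
```

Selecting the action with one network and evaluating it with the other is what reduces the overestimation bias of a plain DQN target.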

Because the environment is very complex, training is going to take a decent amount of time; I'm aiming for ~30k iterations. As of 5/18/24, both models have trained for 2k.

Michael's Description

Stick War was probably THE game of my life (that and Cartoon Wars). I recall playing those two games when I was very young, and I still play them to this day.

So, after learning how to program deep learning models, I decided to make them play Stick War. A basic DQN sucks, and I wanted the best model to play this.

It ended up taking me 1-2 years of learning all the variations of a DQN (C51, Noisy Nets, D3QN, etc.) until I was able to combine them. Even I can't beat them now 🥲

This is basically a Rainbow model; however, I removed some features of the C51 DQN because I got better results without them.

Visuals